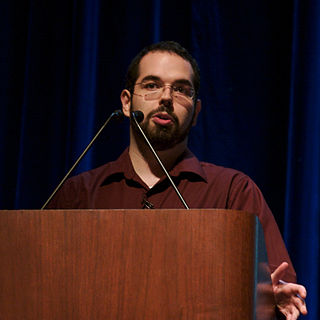

Top 113 Quotes & Sayings by Eliezer Yudkowsky

Explore popular quotes and sayings by American writer Eliezer Yudkowsky.

Last updated on April 14, 2025.

The purest case of an intelligence explosion would be an Artificial Intelligence rewriting its own source code. The key idea is that if you can improve intelligence even a little, the process accelerates. It's a tipping point. Like trying to balance a pen on one end - as soon as it tilts even a little, it quickly falls the rest of the way.

Textbook science is beautiful! Textbook science is comprehensible, unlike mere fascinating words that can never be truly beautiful. Elementary science textbooks describe simple theories, and simplicity is the core of scientific beauty. Fascinating words have no power, nor yet any meaning, without the math.

The systematic experimental study of reproducible errors of human reasoning, and what these errors reveal about underlying mental processes, is known as the heuristics and biases program in cognitive psychology. This program has made discoveries highly relevant to assessors of global catastrophic risks.

The media thinks that only the cutting edge of science, the very latest controversies, are worth reporting on. How often do you see headlines like 'General Relativity still governing planetary orbits' or 'Phlogiston theory remains false'? By the time anything is solid science, it is no longer a breaking headline.

Do not flinch from experiences that might destroy your beliefs. The thought you cannot think controls you more than thoughts you speak aloud. Submit yourself to ordeals and test yourself in fire. Relinquish the emotion which rests upon a mistaken belief, and seek to feel fully that emotion which fits the facts.

If I could create a world where people lived forever, or at the very least a few billion years, I would do so. I don't think humanity will always be stuck in the awkward stage we now occupy, when we are smart enough to create enormous problems for ourselves, but not quite smart enough to solve them.

If dragons were common, and you could look at one in the zoo - but zebras were a rare legendary creature that had finally been decided to be mythical - then there's a certain sort of person who would ignore dragons, who would never bother to look at dragons, and chase after rumors of zebras. The grass is always greener on the other side of reality. Which is rather setting ourselves up for eternal disappointment, eh? If we cannot take joy in the merely real, our lives shall be empty indeed.

You will find ambiguity a great ally on your road to power. Give a sign of Slytherin on one day, and contradict it with a sign of Gryffindor the next; and the Slytherins will be enabled to believe what they wish, while the Gryffindors argue themselves into supporting you as well. So long as there is uncertainty, people can believe whatever seems to be to their own advantage. And so long as you appear strong, so long as you appear to be winning, their instincts will tell them that their advantage lies with you. Walk always in the shadow, and light and darkness both will follow.

My experience is that journalists report on the nearest-cliche algorithm, which is extremely uninformative because there aren't many cliches, the truth is often quite distant from any cliche, and the only thing you can infer about the actual event was that this was the closest cliche. It is simply not possible to appreciate the sheer awfulness of mainstream media reporting until someone has actually reported on you. It is so much worse than you think.

Reality has been around since long before you showed up. Don't go calling it nasty names like 'bizarre' or 'incredible'. The universe was propagating complex amplitudes through configuration space for ten billion years before life ever emerged on Earth. Quantum physics is not 'weird'. You are weird.

Crocker's Rules didn't give you the right to say anything offensive, but other people could say potentially offensive things to you, and it was your responsibility not to be offended. This was surprisingly hard to explain to people; many people would read the careful explanation and hear, "Crocker's Rules mean you can say offensive things to other people."

"Every time someone cries out in prayer and I can't answer, I feel guilty about not being God." "That doesn't sound good." "I understand that I have a problem, and I know what I need to do to solve it, all right? I'm working on it." Of course, Harry hadn't said what the solution was. The solution, obviously, was to hurry up and become God.