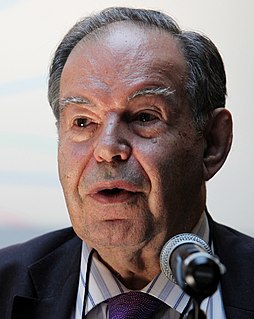

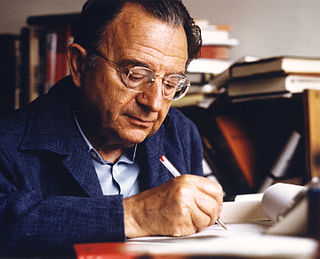

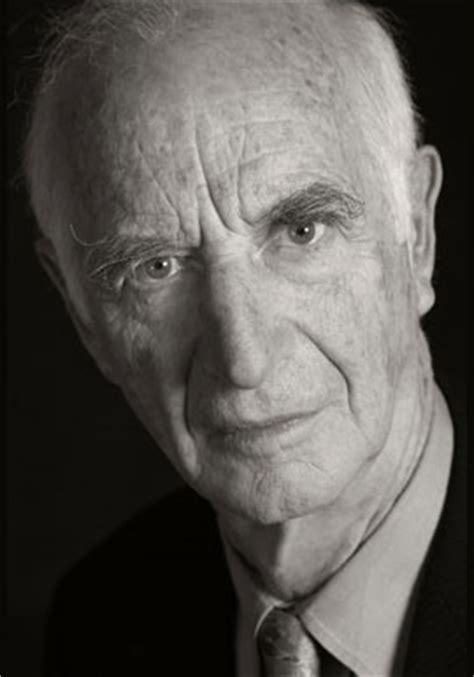

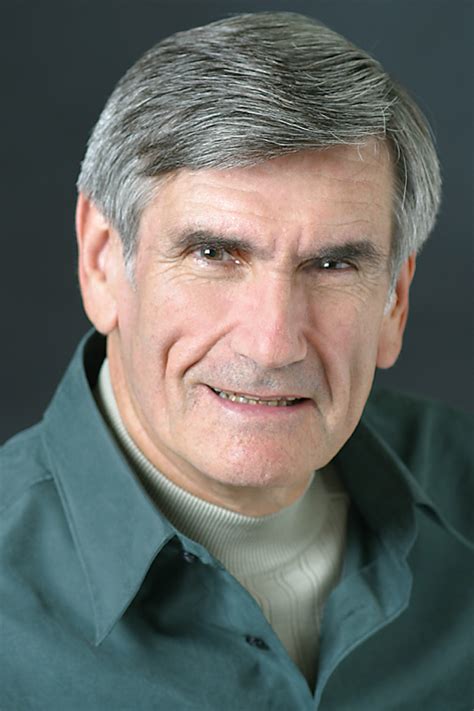

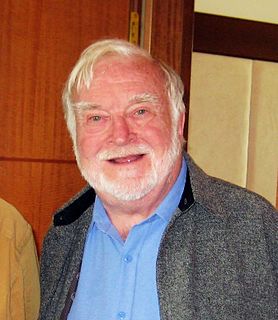

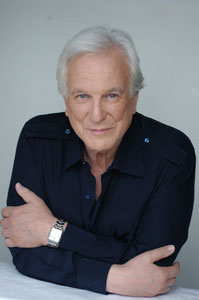

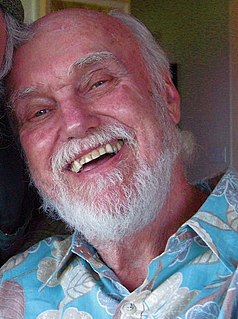

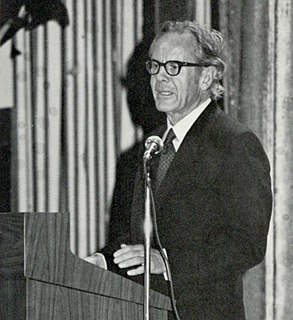

Top 53 Quotes & Sayings by Geoffrey Hinton

Explore popular quotes and sayings by the British psychologist Geoffrey Hinton.

Last updated on April 14, 2025.

Most people in AI, particularly the younger ones, now believe that if you want a system that has a lot of knowledge in, like an amount of knowledge that would take millions of bits to quantify, the only way to get a good system with all that knowledge in it is to make it learn it. You are not going to be able to put it in by hand.

I had a stormy graduate career, where every week we would have a shouting match. I kept doing deals where I would say, 'Okay, let me do neural nets for another six months, and I will prove to you they work.' At the end of the six months, I would say, 'Yeah, but I am almost there. Give me another six months.'