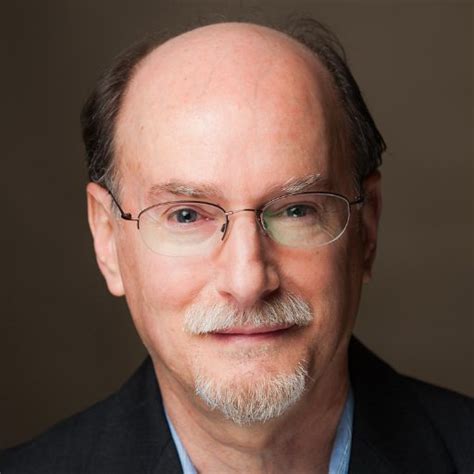

Top 22 Quotes & Sayings by Matt Blaze

Explore popular quotes and sayings by American researcher Matt Blaze.

Last updated on April 18, 2025.

The perspective that law enforcement is presenting seems to be a very narrow one that's focused very, very heavily on investigations of past crimes rather than on preventing future crimes. It's very important for policymakers to take that broader view because they're the ones who are trusted to look at the big picture.

It may be true that encryption makes certain investigations of crime more difficult. It can close down certain investigative techniques or make it harder to get access to certain kinds of electronic evidence. But it also prevents crime by making our computers, our infrastructure, our medical records, our financial records, more robust against criminals. It prevents crime.

If it were possible to hold onto this sort of database and really be assured that only good guys get access to it, we might have a different discussion. Unfortunately, we don't know how to build systems that work that way. We don't know how to do this without creating a big target and a big vulnerability.

I think it's interesting because the 1990s ended with the government pretty much giving up. There was a recognition that encryption was important. In 2000, the government considerably loosened the export controls on encryption technology and really went about actively encouraging the use of encryption rather than discouraging it.

There's been a certain amount of opportunism in the wake of the Paris attacks in 2015, when there was almost a reflexive assumption that, "Oh, if only we didn't have strong encryption out there, these attacks could have been prevented." But, as more evidence has come out - and we don't know all the facts yet - we're seeing very little to support the idea that the Paris attackers were making any kind of use of encryption.

When the September 11th attacks happened, only about a year later, the crypto community was holding its breath because here was a time when we just had an absolutely horrific terrorist attack on U.S. soil, and if the NSA and the FBI were unhappy with anything, Congress was ready to pass any law they wanted. The PATRIOT Act got pushed through very, very quickly with bipartisan support and very, very little debate, yet it didn't include anything about encryption.

I believed then, and continue to believe now, that the benefits to our security and freedom of widely available cryptography far, far outweigh the inevitable damage that comes from its use by criminals and terrorists. I believed, and continue to believe, that the arguments against widely available cryptography, while certainly advanced by people of good will, did not hold up against the cold light of reason and were inconsistent with the most basic American values.

Clipper took a relatively simple problem, encryption between two phones, and turned it into a much more complex problem, encryption between two phones but that can be decrypted by the government under certain conditions and, by making the problem that complicated, that made it very easy for subtle flaws to slip by unnoticed. I think it demonstrated that this problem is not just a tough public policy problem, but it's also a tough technical problem.

Telephone handsets are particularly in need of built-in security. We have almost every aspect of our personal and work lives reflected on them and we lose them all the time. We leave them in taxis. We leave them on airplanes. The consequences of one of these devices falling into the wrong hands are very, very serious.

So, in 1993, in what was probably the first salvo of the first Crypto War, there was concern coming from the National Security Agency and the FBI that encryption would soon be incorporated into lots of communications devices, and that that would cause wiretaps to go dark. There was not that much commercial use of encryption at that point. Encryption, particularly for communications traffic, was mostly something done by the government.

If we try to prohibit encryption or discourage it or make it more difficult to use, we're going to suffer the consequences that will be far reaching and very difficult to reverse, and we seem to have realized that in the wake of the September 11th attacks. To the extent there is any reason to be hopeful, perhaps that's where we'll end up here.

From a policymaker's point of view, [the back door] must look like a perfect solution. "We'll hold onto a separate copy of the keys, and we'll try to keep them really, really safe so that only in an emergency and if it's authorized by a court will we bring out those keys and use them." And, from a policy point of view, when you describe it that way, who could be against that?

As we build systems that are more and more complex, we make more and more subtle but very high-impact mistakes. As we use computers for more things and as we build more complex systems, this problem of unreliability and insecurity is actually getting worse, with no real sign of abating anytime soon.