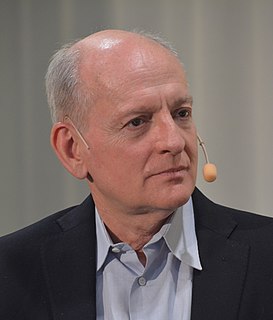

Top 29 Quotes & Sayings by Stuart J. Russell

Explore popular quotes and sayings by British computer scientist Stuart J. Russell.

Last updated on April 14, 2025.

Some people think that, inevitably, every robot that does any task is a bad thing for the human race, because it could be taking a job away. But that isn't necessarily true. You can also think of the robot as making a person more productive and enabling people to do things that are currently economically infeasible. But a person plus a robot or a fleet of robots could do things that would be really useful.

You're able to use a search engine, like Google or Bing or whatever. But those engines don't understand anything about pages that they give you; they essentially index the pages based on the words that you're searching, and then they intersect that with the words in your query, and they use some tricks to figure out which pages are more important than others. But they don't understand anything.

What AI could do is essentially be a power tool that magnifies human intelligence and gives us the ability to move our civilization forward. It might be curing disease, it might be eliminating poverty. Certainly it should include preventing environmental catastrophe. If AI could be instrumental to all those things, then I would feel it was worthwhile.

If you had a system that could read all the pages and understand the context, instead of just throwing back 26 million pages to answer your query, it could actually answer the question. You could ask a real question and get an answer as if you were talking to a person who read all those millions and billions of pages, understood them, and synthesized all that information.

We call ourselves Homo sapiens--man the wise--because our intelligence is so important to us. For thousands of years, we have tried to understand how we think: that is, how a mere handful of matter can perceive, understand, predict, and manipulate a world far larger and more complicated than itself. The field of artificial intelligence, or AI, goes further still: it attempts not just to understand but also to build intelligent entities.