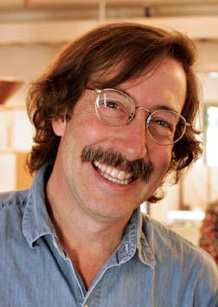

A Quote by Ro Khanna

We should have companies required to get the consent of individuals before collecting their data, and we should have as individuals the right to know what's happening to our data and whether it's being transferred.

Related Quotes

One of the myths about the Internet of Things is that companies have all the data they need, but their real challenge is making sense of it. In reality, the cost of collecting some kinds of data remains too high, the quality of the data isn't always good enough, and it remains difficult to integrate multiple data sources.

Traditional companies have been subject to licensing for many years. Attention utilities should be required to obey limits on data extraction and message amplification practices that drive polarisation, and should be required to protect children. We should ban or limit microtargeting of advertising, recommendations and other behavioural nudges.

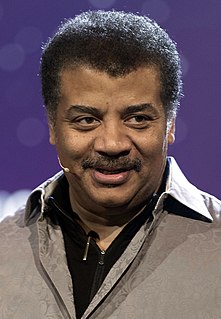

Any time scientists disagree, it's because we have insufficient data. Then we can agree on what kind of data to get; we get the data; and the data solves the problem. Either I'm right, or you're right, or we're both wrong. And we move on. That kind of conflict resolution does not exist in politics or religion.

When dealing with data, scientists have often struggled to account for the risks and harms using it might inflict. One primary concern has been privacy - the disclosure of sensitive data about individuals, either directly to the public or indirectly from anonymised data sets through computational processes of re-identification.

Everyone knows, or should know, that everything we type on our computers or say into our cell phones is being disseminated throughout the datasphere. And most of it is recorded and parsed by big data servers. Why do you think Gmail and Facebook are free? You think they're corporate gifts? We pay with our data.

We get more data about people than any other data company gets about people, about anything - and it's not even close. We're looking at what you know, what you don't know, how you learn best. The big difference between us and other big data companies is that we're not ever marketing your data to a third party for any reason.

Scientific data are not taken for museum purposes; they are taken as a basis for doing something. If nothing is to be done with the data, then there is no use in collecting any. The ultimate purpose of taking data is to provide a basis for action or a recommendation for action. The step intermediate between the collection of data and the action is prediction.

The big thing that's happened is, in the time since the Affordable Care Act has been going on, our medical science has been advancing. We have now genomic data. We have the power of big data about what your living patterns are, what's happening in your body. Even your smartphone can collect data about your walking or your pulse or other things that could be incredibly meaningful in being able to predict whether you have disease coming in the future and help avert those problems.