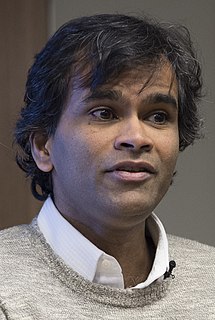

A Quote by Sendhil Mullainathan

The problem with data is that it says a lot, but it also says nothing. 'Big data' is terrific, but it's usually thin. To understand why something is happening, we have to engage in both forensics and guesswork.

Related Quotes

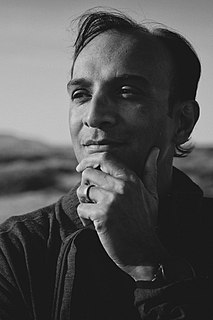

Let's look at lending, where they're using big data for the credit side. And it's just credit data enhanced, by the way, which we do, too. It's nothing mystical. But they're very good at reducing the pain points. They can underwrite it quicker using - I'm just going to call it big data, for lack of a better term: "Why does it take two weeks? Why can't you do it in 15 minutes?"

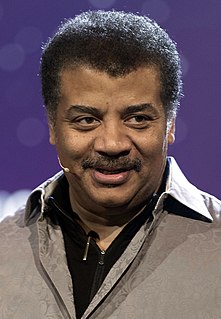

Any time scientists disagree, it's because we have insufficient data. Then we can agree on what kind of data to get; we get the data; and the data solves the problem. Either I'm right, or you're right, or we're both wrong. And we move on. That kind of conflict resolution does not exist in politics or religion.

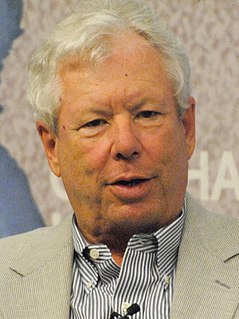

Disruptive technology is a theory. It says this will happen and this is why; it's a statement of cause and effect. In our teaching we have so exalted the virtues of data-driven decision making that in many ways we condemn managers only to be able to take action after the data is clear and the game is over. In many ways a good theory is more accurate than data. It allows you to see into the future more clearly.

Scientific data are not taken for museum purposes; they are taken as a basis for doing something. If nothing is to be done with the data, then there is no use in collecting any. The ultimate purpose of taking data is to provide a basis for action or a recommendation for action. The step intermediate between the collection of data and the action is prediction.