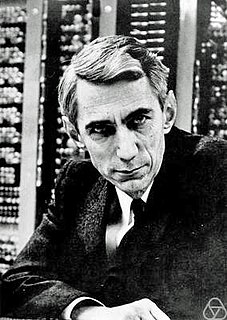

A Quote by William Poundstone

Use "entropy" and you can never lose a debate, von Neumann told Shannon - because no one really knows what "entropy" is.

Related Quotes

My greatest concern was what to call it. I thought of calling it 'information,' but the word was overly used, so I decided to call it 'uncertainty.' When I discussed it with John von Neumann, he had a better idea. Von Neumann told me, 'You should call it entropy, for two reasons. In the first place your uncertainty function has been used in statistical mechanics under that name, so it already has a name. In the second place, and more important, no one really knows what entropy really is, so in a debate you will always have the advantage.'

The fact that you can remember yesterday but not tomorrow is because of entropy. The fact that you're always born young and then you grow older, and not the other way around like Benjamin Button - it's all because of entropy. So I think that entropy is underappreciated as something that has a crucial role in how we go through life.

There was a seminar for advanced students in Zürich that I was teaching and von Neumann was in the class. I came to a certain theorem, and I said it is not proved and it may be difficult. Von Neumann didn't say anything but after five minutes he raised his hand. When I called on him he went to the blackboard and proceeded to write down the proof. After that I was afraid of von Neumann.

John von Neumann gave me an interesting idea: that you don't have to be responsible for the world that you're in. So I have developed a very powerful sense of social irresponsibility as a result of von Neumann's advice. It's made me a very happy man ever since. But it was von Neumann who put the seed in that grew into my active irresponsibility!

It's really hard to design products by focus groups. A lot of times, people don't know what they want until you show it to them. As Henry Ford said many years earlier: "If I had listened to my customers, I would have built a faster horse." Inventions in general express Shannon entropy. They come from the supply side.

Von Neumann languages do not have useful properties for reasoning about programs. Axiomatic and denotational semantics are precise tools for describing and understanding conventional programs, but they only talk about them and cannot alter their ungainly properties. Unlike von Neumann languages, the language of ordinary algebra is suitable both for stating its laws and for transforming an equation into its solution, all within the "language."

Just as entropy is a measure of disorganization, the information carried by a set of messages is a measure of organization. In fact, it is possible to interpret the information carried by a message as essentially the negative of its entropy, and the negative logarithm of its probability. That is, the more probable the message, the less information it gives. Cliches, for example, are less illuminating than great poems.
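The relation stated in the quote above, that a message's information is the negative logarithm of its probability, can be sketched in a few lines. This is only an illustration: the function name `self_information`, the choice of base-2 logarithms (bits), and the example probabilities are assumptions, not part of the quote.

```python
import math


def self_information(p: float) -> float:
    """Information content, in bits, of a message that occurs with probability p.

    Hypothetical helper for illustration; base 2 is an assumed unit choice.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log2(p)


# Made-up probabilities, chosen only to show the trend.
cliche = self_information(0.5)      # a highly predictable message
surprise = self_information(0.125)  # a less predictable message

print(f"predictable message: {cliche} bits")
print(f"surprising message:  {surprise} bits")
```

With these assumed values the more probable message carries fewer bits than the less probable one, which is the sense in which a cliche is "less illuminating" than a great poem.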