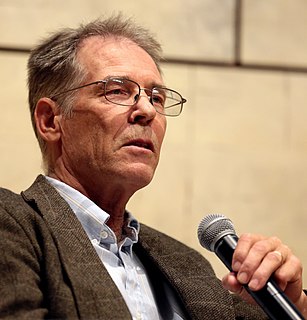

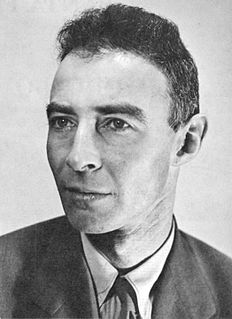

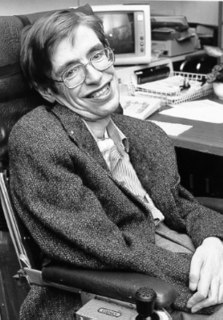

A Quote by Alan Guth

If we assume there is no maximum possible entropy for the universe, then any state can be a state of low entropy.

Related Quotes

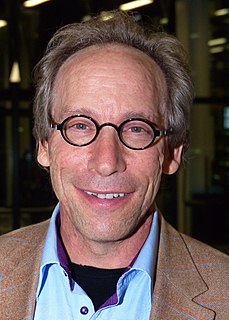

The fact that you can remember yesterday but not tomorrow is because of entropy. The fact that you're always born young and then you grow older, and not the other way around like Benjamin Button - it's all because of entropy. So I think that entropy is underappreciated as something that has a crucial role in how we go through life.

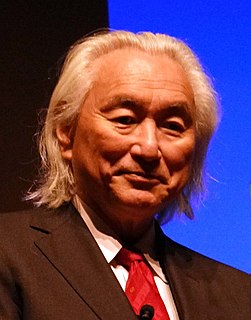

Aging is a staircase - the upward ascension of the human spirit, bringing us into wisdom, wholeness and authenticity. As you may know, the entire world operates on a universal law: entropy, the second law of thermodynamics. Entropy means that everything in the world, everything, is in a state of decline and decay, the arch. There's only one exception to this universal law, and that is the human spirit, which can continue to evolve upwards.

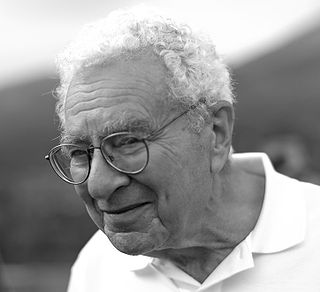

Just as entropy is a measure of disorganization, the information carried by a set of messages is a measure of organization. In fact, it is possible to interpret the information carried by a message as essentially the negative of its entropy, and the negative logarithm of its probability. That is, the more probable the message, the less information it gives. Cliches, for example, are less illuminating than great poems.
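The relation in the last quote, information as the negative logarithm of a message's probability, can be illustrated with a small sketch. It assumes base-2 logarithms, so the result is in bits; the function name `self_information` and the example probabilities are hypothetical and chosen only for illustration, not taken from the quoted text.

```python
import math


def self_information(p: float) -> float:
    """Return the information, in bits, carried by a message of probability p.

    Assumes p is a probability in the interval (0, 1].
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log2(p)


# Hypothetical probabilities: a common message and a rare one.
common = self_information(0.5)    # 1.0 bit
rare = self_information(0.125)    # 3.0 bits

print(f"common message: {common} bits")
print(f"rare message:   {rare} bits")
```

The less probable message yields the larger value, which matches the quote's point that the more probable the message, the less information it gives.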