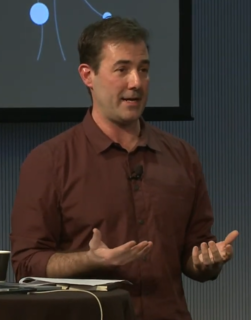

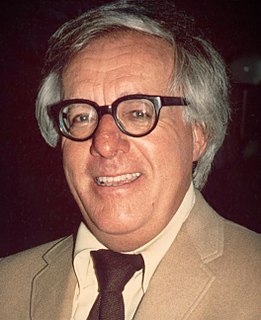

A Quote by Clay Shirky

Algorithms don't do a good job of detecting their own flaws.

Related Quotes

There's never a mistake in the universe. So if your partner is angry, good. If there are things about him that you consider flaws, good, because these flaws are your own, you're projecting them, and you can write them down, inquire, and set yourself free. People go to India to find a guru, but you don't have to: you're living with one. Your partner will give you everything you need for your own freedom.

These algorithms, which I'll call public relevance algorithms, are, by the very same mathematical procedures, producing and certifying knowledge. The algorithmic assessment of information, then, represents a particular knowledge logic, one built on specific presumptions about what knowledge is and how one should identify its most relevant components. That we are now turning to algorithms to identify what we need to know is as momentous as having relied on credentialed experts, the scientific method, common sense, or the word of God.

It's difficult to make your clients understand that there are certain days that the market will go up or down 2%, and it's basically driven by algorithms talking to algorithms. There's no real rhyme or reason for that. So it's difficult. We just try to preach long-term investing and staying the course.