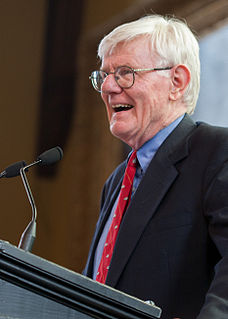

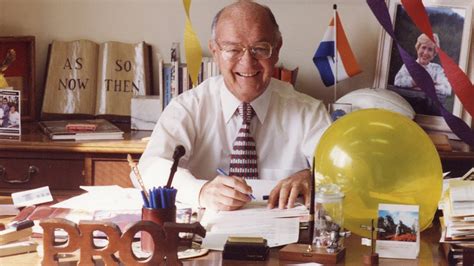

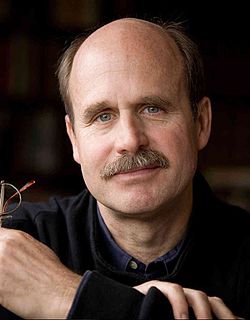

A Quote by Clayton Christensen

Every disruptive innovation is powered by a simplifying technology, and then the technology has to get embedded in a different kind of a business model. The first two decades of digital computing were characterized by the huge mainframe computers that filled a whole room, and they had to be operated by PhD Computer Scientists. It took the engineers at IBM about four years to design these mainframe computers because there were no rules. It was an intuitive art and just by trial and error and experimentation they would evolve to a computer that worked.

Related Quotes

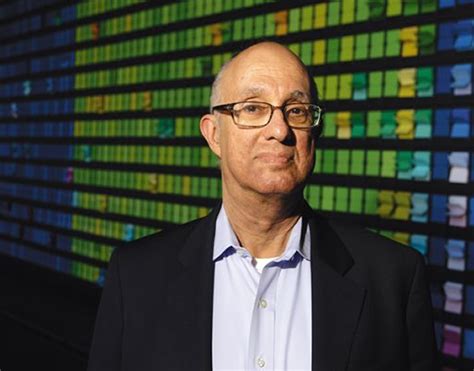

The personal computer was a disruptive innovation relative to the mainframe because it enabled even a poor fool like me to have a computer and use it, and it was enabled by the development of the microprocessor. The microprocessor made it so simple to design and build a computer that IB could throw it together in a garage. And so, you have that simplifying technology as a part of every disruptive innovation. It then becomes an innovation when the technology is embedded in a different business model that can take the simplified solution to the market in a cost-effective way.

Generally, the technology that enables disruption is developed in the companies that are the practitioners of the original technology. That's where the understanding of the technology first comes together. They usually can't commercialize the technology because they have to couple it with the business model innovation, and because they tend to try to take all of their technologies to market through their original business model, somebody else just picks up the technology and changes the world through the business model innovation.

Right up till the 1980s, SF envisioned giant mainframe computers that ran everything remotely, that ingested huge amounts of information and regurgitated it in startling ways, and that behaved (or were programmed to behave) very much like human beings... Now we have 14-year-olds with more computing power on their desktops than existed in the entire world in 1960. But computers in fiction are still behaving in much the same way as they did in the Sixties. That's because in fiction [artificial intelligence] has to follow the laws of dramatic logic, just like human characters.

Today, your cell phone has more computer power than all of NASA back in 1969, when it placed two astronauts on the moon. Video games, which consume enormous amounts of computer power to simulate 3-D situations, use more computer power than mainframe computers of the previous decade. The Sony PlayStation of today, which costs $300, has the power of a military supercomputer of 1997, which cost millions of dollars.

With both people and computers on the job, computer error can be more quickly tracked down and corrected by people and, conversely, human error can be more quickly corrected by computers. What it amounts to is that nothing serious can happen unless human error and computer error take place simultaneously. And that hardly ever happens.

What’s next for technology and design? A lot less thinking about technology for technology’s sake, and a lot more thinking about design. Art humanizes technology and makes it understandable. Design is needed to make sense of information overload. It is why art and design will rise in importance during this century as we try to make sense of all the possibilities that digital technology now affords.

History is littered with great firms that got killed by disruption. Of course, the personal computer, a technology that first took root as a toy, got Digital Equipment Corporation. Kodak missed the boat for a long time on digital imaging. Sony was slow to get MP3 technology. Microsoft doesn't know what to do with open source software. And so on.