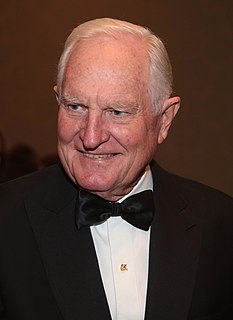

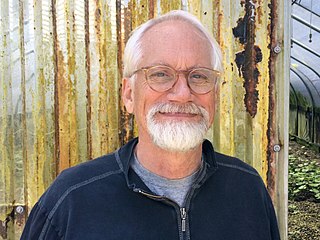

A Quote by Craig R. Barrett

Innovate, integrate, innovate, integrate, that's the way the industry works, ... Graphics was a stand-alone graphics card; then it's going to be a stand-alone graphics chip; and then part of that's going to get integrated into the main CPU.

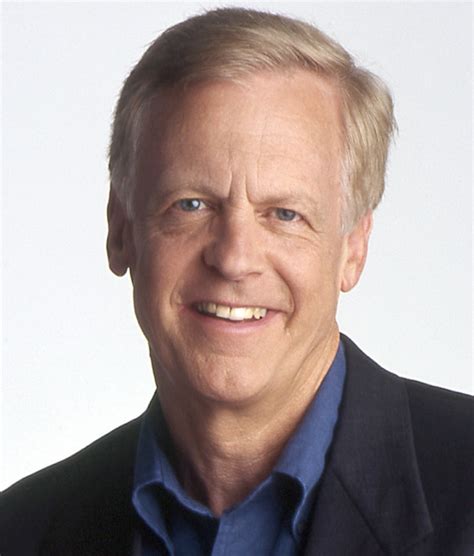

Related Quotes

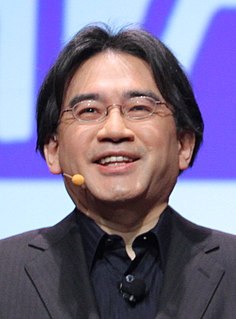

We're also looking a lot at graphics and video. We've done a lot on a deep technical level to make sure that the next version of Firefox will have all sorts of new graphics capabilities. And the move from audio to video is just exploding. So those areas in particular, mobile and graphics and video, are really important to making the Web today and tomorrow as open as it can be.

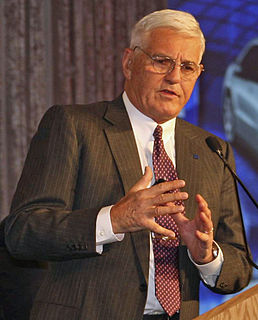

The original AMD GCN architecture allowed for one source of graphics commands, and two sources of compute commands. For PS4, we've worked with AMD to increase the limit to 64 sources of compute commands - the idea is if you have some asynchronous compute you want to perform, you put commands in one of these 64 queues, and then there are multiple levels of arbitration in the hardware to determine what runs, how it runs, and when it runs, alongside the graphics that's in the system.
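The queueing idea in this quote can be pictured with a small toy model. The sketch below is purely illustrative: it assumes one graphics queue and 64 compute queues, as the quote states, but the round-robin policy, the one-command-per-step limit, and every name in it (`ToyArbiter`, `submit_compute`, `step`) are hypothetical choices for the example. The quote says only that the hardware has "multiple levels of arbitration" and does not describe how they work.

```python
from collections import deque

NUM_COMPUTE_QUEUES = 64  # the figure given in the quote


class ToyArbiter:
    """Toy model: one graphics queue plus many compute queues.

    Assumption: compute queues are served round-robin, one command per step.
    The real hardware arbitration is not described in the quote.
    """

    def __init__(self, num_compute_queues=NUM_COMPUTE_QUEUES):
        self.graphics = deque()
        self.compute = [deque() for _ in range(num_compute_queues)]
        self._next = 0  # index where the next round-robin scan starts

    def submit_graphics(self, cmd):
        self.graphics.append(cmd)

    def submit_compute(self, queue_index, cmd):
        self.compute[queue_index].append(cmd)

    def step(self):
        """Issue at most one graphics and one compute command."""
        issued = []
        if self.graphics:
            issued.append(("graphics", self.graphics.popleft()))
        n = len(self.compute)
        for offset in range(n):
            i = (self._next + offset) % n
            if self.compute[i]:
                issued.append((f"compute[{i}]", self.compute[i].popleft()))
                self._next = (i + 1) % n  # resume the scan after this queue
                break
        return issued


arb = ToyArbiter()
arb.submit_graphics("draw")
arb.submit_compute(5, "a")
arb.submit_compute(63, "b")
arb.submit_compute(5, "c")
first = arb.step()   # graphics and compute work issued side by side
second = arb.step()  # the scan moves on to queue 63 before returning to 5
```

In this model, compute work placed in any queue is picked up alongside graphics work without the submitter having to coordinate with other queues, which is the usage pattern the quote describes.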