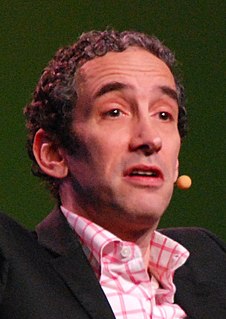

A Quote by Douglas Rushkoff

I think there can be a positive sort of futurism even in a presentist society. But I think it's a kind of futurism that envisions augmenting human ability and intellect rather than creating some artificial machine intelligence that displaces us.

Related Quotes

There is a movement we call Afro-Futurism, where we imagine a black way of life free of white supremacy and bigotry. 'Black Panther,' I think, is the first blockbuster film centered in the ethos of Afro-Futurism, where the writers and directors and makeup and wardrobe team all imagined a beautiful, thriving black Africa without colonialism.

I think whatever nation or whoever develops one artificial intelligence will probably make it so that artificial intelligence always stays ahead of any other developing artificial intelligence at any other point in time. It might even do things like send viruses to a second artificial intelligence, just so it can wipe it out, to protect its grounds. It's gonna be very similar to national politics.

In the field of Artificial Intelligence there is no more iconic and controversial milestone than the Turing Test, when a computer convinces a sufficient number of interrogators into believing that it is not a machine but rather is a human. It is fitting that such an important landmark has been reached at the Royal Society in London, the home of British Science and the scene of many great advances in human understanding over the centuries. This milestone will go down in history as one of the most exciting.

What I advocate for is that, as soon as we get to the point when artificial intelligence can take off and be as smart, or even 10 times more intelligent than us, we stop that research and we have the research of cranial implant technology or the brainwave. And we make that so good so that, when artificial intelligence actually decides - when we actually decide to switch the on-button - human beings will also be a part of that intelligence. We will be merged, basically directly.

I advocate as a futurist and also as a member of the Transhumanist Party, that we never let artificial intelligence completely go on its own. I just don't see why the human species needs an artificial entity, an artificial intelligence entity, that's 10,000 times smarter than us. I just don't see why that could ever be a good thing.

In the future it's very possible you could have an artificial intelligence system that can run the country better than a human being. Because human beings are naturally selfish. Human beings are naturally after their own interests. We are geared towards pursuing our own desires, but oftentimes, those desires have contrasts to the benefit of society, at large, or against the benefit of the greater good. Whereas, if you have a machine, you will be able to program that machine to, hopefully, benefit the greatest good, and really go after that.

It is an odd fact of evolution that we are the only species on Earth capable of creating science and philosophy. There easily could have been another species with some scientific talent, say that of the average human ten-year-old, but not as much as adult humans have; or one that is better than us at physics but worse at biology; or one that is better than us at everything. If there were such creatures all around us, I think we would be more willing to concede that human scientific intelligence might be limited in certain respects.

In 'Chappie,' you see this sort of young robot that's learning through maybe 'deep learning' how to see the world really, look out into the world, and learn step by step. What's so interesting is that with 'Chappie,' you're getting to see how human behavior reacts to artificial intelligence, and I don't think it's always going to be positive.

How human beings are, that is how the society will be. So, creating human beings who are flexible and willing to look at everything rather than being stuck in their ideas and opinions definitely makes for a different kind of society. And the very energy that such human beings carry will influence everything around them.