A Quote by Fei-Fei Li

When I was a graduate student in computer science in the early 2000s, computers were barely able to detect sharp edges in photographs, let alone recognize something as loosely defined as a human face.

Related Quotes

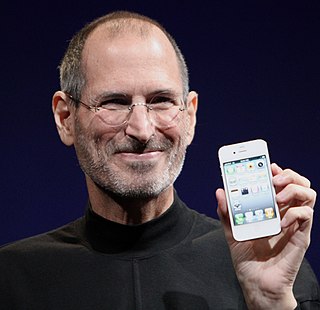

Because computers have memories, we imagine that they must be something like our human memories, but that is simply not true. Computer memories work in a manner alien to human memories. My memory lets me recognize the faces of my friends, whereas my own computer never even recognizes me. My computer's memory stores a million phone numbers with perfect accuracy, but I have to stop and think to recall my own.

There was a golden period that I look back upon with great regret, in which the cheapest of experimental animals were medical students. Graduate students were even better. In the old days, if you offered a graduate student a thiamine-deficient diet, he gladly went on it, for that was the only way he could eat. Science is getting to be more and more difficult.

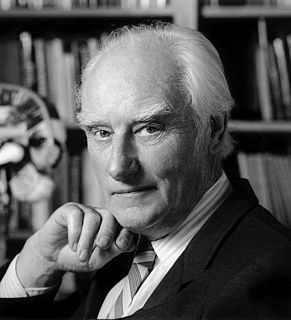

I started doing science when I was effectively 20, a graduate student of Salvador Luria at Indiana University. And that was - you know, it took me about two years, you know, being a graduate student with Luria deciding I wanted to find the structure of DNA; that is, DNA was going to be my objective.

The term "informatics" was first defined by Saul Gorn of University of Pennsylvania in 1983 (Gorn, 1983) as computer science plus information science used in conjunction with the name of a discipline such as business administration or biology. It denotes an application of computer science and information science to the management and processing of data, information and knowledge in the named discipline.

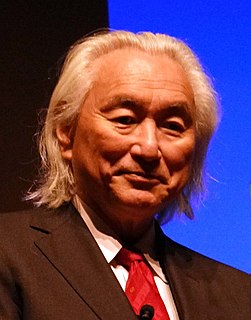

With both people and computers on the job, computer error can be more quickly tracked down and corrected by people and, conversely, human error can be more quickly corrected by computers. What it amounts to is that nothing serious can happen unless human error and computer error take place simultaneously. And that hardly ever happens.