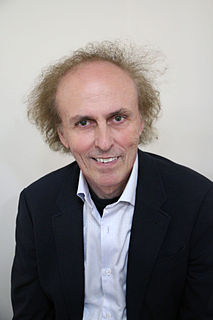

A Quote by Jesse Shera

The computer is here to stay, therefore it must be kept in its proper place as a tool and a slave, or we will become sorcerer's apprentices, with data data everywhere and not a thought to think.

Related Quotes

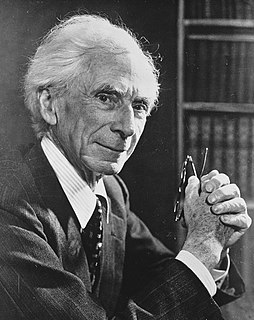

We are ... led to a somewhat vague distinction between what we may call "hard" data and "soft" data. This distinction is a matter of degree, and must not be pressed; but if not taken too seriously it may help to make the situation clear. I mean by "hard" data those which resist the solvent influence of critical reflection, and by "soft" data those which, under the operation of this process, become to our minds more or less doubtful.

EMA research evidences strong and growing interest in leveraging log data across multiple infrastructure planning and operations management use cases. But to fully realize the potential complementary value of unstructured log data, it must be aligned and integrated with structured management data, and manual analysis must be replaced with automated approaches. By combining the RapidEngines capabilities with its existing solution, SevOne will be the first to truly integrate log data into an enterprise-class, carrier-grade performance management system.

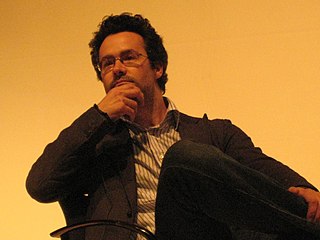

PowerPoint presentations, the cesspool of data visualization that Microsoft has visited upon the earth. PowerPoint, indeed, is a cautionary tale in our emerging data literacy. It shows that tools matter: Good ones help us think well and bad ones do the opposite. Ever since it was first released in 1990, PowerPoint has become an omnipresent tool for showing charts and info during corporate presentations.

You have to imagine a world in which there's this abundance of data, with all of these connected devices generating tons and tons of data. And you're able to reason over the data with new computer science and make your product and service better. What does your business look like then? That's the question every CEO should be asking.

There is so much information that our ability to focus on any piece of it is interrupted by other information, so that we bathe in information but hardly absorb or analyse it. Data are interrupted by other data before we've thought about the first round, and contemplating three streams of data at once may be a way to think about none of them.

One of the myths about the Internet of Things is that companies have all the data they need, but their real challenge is making sense of it. In reality, the cost of collecting some kinds of data remains too high, the quality of the data isn't always good enough, and it remains difficult to integrate multiple data sources.

MapReduce has become the assembly language for big data processing, and SnapReduce employs sophisticated techniques to compile SnapLogic data integration pipelines into this new big data target language. Applying everything we know about the two worlds of integration and Hadoop, we built our technology to directly fit MapReduce, making the process of connectivity and large scale data integration seamless and simple.