A Quote by Kate Crawford

Data will always bear the marks of its history. That is human history held in those data sets.

Related Quotes

When dealing with data, scientists have often struggled to account for the risks and harms using it might inflict. One primary concern has been privacy - the disclosure of sensitive data about individuals, either directly to the public or indirectly from anonymised data sets through computational processes of re-identification.

One of the myths about the Internet of Things is that companies have all the data they need, but their real challenge is making sense of it. In reality, the cost of collecting some kinds of data remains too high, the quality of the data isn't always good enough, and it remains difficult to integrate multiple data sources.

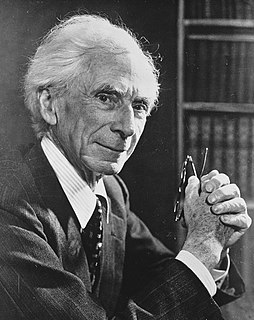

We are ... led to a somewhat vague distinction between what we may call "hard" data and "soft" data. This distinction is a matter of degree, and must not be pressed; but if not taken too seriously it may help to make the situation clear. I mean by "hard" data those which resist the solvent influence of critical reflection, and by "soft" data those which, under the operation of this process, become to our minds more or less doubtful.