A Quote by Kristen Stewart

It's hard to generalize about that subject because the women I've worked with have all been so different. But if there's one consistency, it might be that you do have to handle yourself differently on a set. Women can be more emotional - at least they sometimes show it more.

Related Quotes

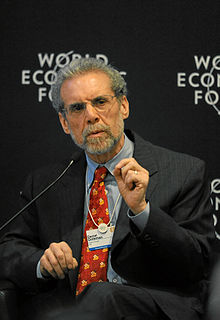

What I find interesting and heartening, though, is that there does seem to be a shift in the subject matter being written about by women that is doing well in the culture. We're seeing more women writing dystopian fiction, more women writing novels set in post-apocalyptic settings, subjects and themes that used to be dominated by men.

We had early on women having the right to vote, then women in the workforce during WWII, just going back in history, and then we had the higher education of women, and then women more fully participating in the economy and in business, the professions, education, you name the subject... but the missing link has always been: is there quality, affordable healthcare for all women, regardless of what their family situation might be?

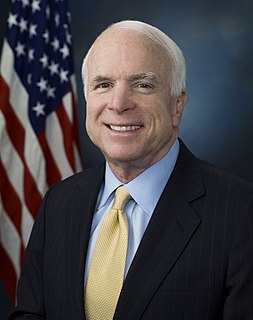

Women need the education and training, particularly since more and more women are heads of their households, as much or more than anybody else...And it's hard for them to leave their families when they don't have somebody to take care of them....It's a vicious cycle that's affecting women, particularly in a part of the country like this, where mining is the mainstay; traditionally, women have not gone into that line of work, to say the least.

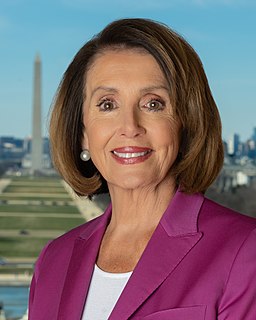

I've been a staunch advocate of women's empowerment, and I've worked hard throughout my career to advance the cause. It is heartening to see that gender equality is really becoming more of a reality. There is still much more to be done, and I'm confident that, by working together, we can empower women worldwide.

There are things that directors know about me that people shouldn't know. But everyone's really different. I've worked with women who I've never wanted to tell anything about myself to, and I've worked with guys who have been pouring wells of emotion. So emotional availability is not a gender-specific thing.

Space travel is a dream for many men and women. I think my trip will be perceived differently by different genders because for women, a lot of time, not only space travel, it's not accessible to everyone, but is even less accessible to women, there are a lot more barriers for them especially if they live in countries where things like space travel, engineering, any science and technology-related field would be considered a more male-dominated field. And so I want to show them that there is nothing preventing women, or making them less qualified to be involved in any of these fields.

The problem in business isn't that women are overlooked because they are women, it's that most people subconsciously look to employ a mini-me. It's not a gender issue, it's about diversification full stop. It's hard to change that mindset and it hits women particularly hard because men historically have always been the recruiters.

All year there have been these cover stories that the women's movement is dead and about the death of feminism and the post-feminist generation of young women who don't identify with feminism - and then we have the biggest march ever of women in Washington. More people than had ever marched for anything - not only more women, but more people.