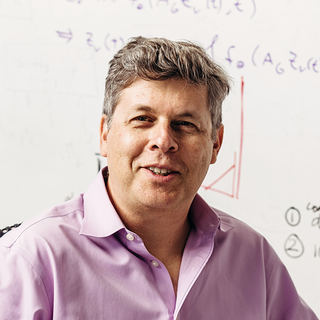

A Quote by Rodney Brooks

I think it's very easy for people who are not deep in the technology itself to make generalizations, which may be a little dangerous. And we've certainly seen that recently with Elon Musk, Bill Gates, Stephen Hawking, all saying AI is just taking off and it's going to take over the world very quickly. And the thing that they share is none of them work in this technological field.

Related Quotes

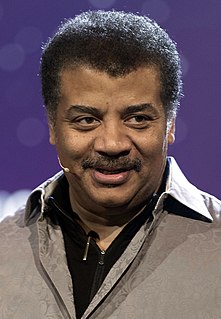

Like people including Stephen Hawking and Elon Musk have predicted, I agree that the future is scary and very bad for people. If we build these devices to take care of everything for us, eventually they'll think faster than us and they'll get rid of the slow humans to run companies more efficiently.

I think whatever is going on with my brain, I'm very, very - and I'm not saying this as a positive thing, it's just a fact - I'm very creative. I have a very strong imagination, and have since I was a little kid. That is where a lot of my world comes from. It's like I'm off somewhere else. And I can have a problem in life because of that, because I'm always off in some other world thinking about something else. It's constant.

Having serious consequences to your decision-making process is something you have to be very comfortable with. It's something you learn and you practise over time, so I encourage people to find some way to challenge themselves. The other thing I share with people, which I've learned over time, is self-confidence. You have to get very comfortable with saying, "Well, every day, I'm just going to give my best. I have skill sets I've learned, I'm going to employ them, and my best is going to be good enough".

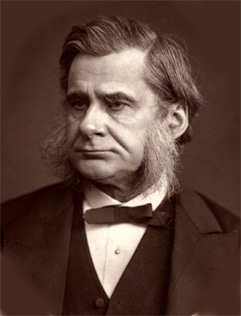

The saying that a little knowledge is a dangerous thing is, to my mind, a very dangerous adage. If knowledge is real and genuine, I do not believe that it is other than a very valuable possession, however infinitesimal its quantity may be. Indeed, if a little knowledge is dangerous, where is a man who has so much as to be out of danger?

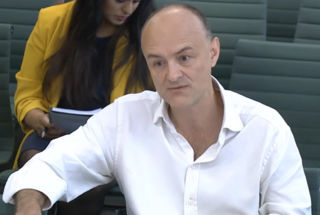

You can't just stop technological progress. Even if one country stops researching artificial intelligence, some other countries will continue to do it. The real question is what to do with the technology. You can use exactly the same technology for very different social and political purposes. So I think people shouldn't be focused on the question of how to stop technological progress because this is impossible. Instead the question should be what kind of usage to make of the new technology. And here we still have quite a lot of power to influence the direction it's taking.

The key strengths of civilizations are also their central weaknesses. You can see that from the fact that the golden ages of civilizations are very often right before the collapse. The Renaissance in Italy was very much like the Classic Maya. The apogee was the collapse. The Golden Age of Greece was the same thing. We see this pattern repeated continuously, and it is one that should make us nervous. I just heard Bill Gates say that we are living in the greatest time in history. Now you can understand why Bill Gates would think that, but even if he is right, that is an ominous thing to say.

There are a few historical reasons for why git was considered complicated. One of them is that it was complicated. The people who started using git very early on in order to work on the kernel really had to learn a very rough set of scripts to make everything work. All the effort had been on making the core technology work and very little on making it easy or obvious.

People who have only good experiences aren't very interesting. They may be content, and happy after a fashion, but they aren't very deep. It may seem a misfortune now, and it makes things difficult, but well--it's easy to feel all the happy, simple stuff. Not that happiness is necessarily simple. But I don't think you're going to have a life like that, and I think you'll be the better for it. The difficult thing is to not be overwhelmed by the bad patches. You must not let them defeat you. You must see them as a gift--a cruel gift, but a gift nonetheless.