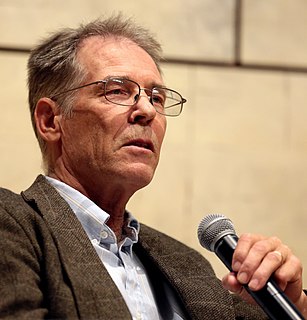

A Quote by Sean M. Carroll

The arrow of time doesn't move forward forever. There's a phase in the history of the universe where you go from low entropy to high entropy. But then, once you reach the locally maximum entropy you can get to, there's no more arrow of time.

Related Quotes

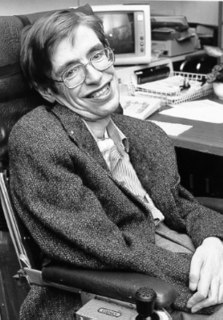

The story of the universe finally comes to an end. For the first time in its life, the universe will be permanent and unchanging. Entropy finally stops increasing because the cosmos cannot get any more disordered. Nothing happens, and it keeps not happening, forever. It's what's known as the heat-death of the universe. An era when the cosmos will remain vast and cold and desolate for the rest of time. The arrow of time has simply ceased to exist. It's an inescapable fact of the universe, written into the fundamental laws of physics: the entire cosmos will die.

The fact that you can remember yesterday but not tomorrow is because of entropy. The fact that you're always born young and then you grow older, and not the other way around like Benjamin Button - it's all because of entropy. So I think that entropy is underappreciated as something that has a crucial role in how we go through life.

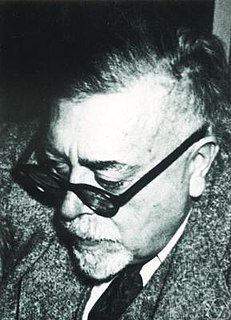

Just as entropy is a measure of disorganization, the information carried by a set of messages is a measure of organization. In fact, it is possible to interpret the information carried by a message as essentially the negative of its entropy, and the negative logarithm of its probability. That is, the more probable the message, the less information it gives. Cliches, for example, are less illuminating than great poems.
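
The "negative logarithm of its probability" in the quote above can be illustrated with a small sketch. It assumes base-2 logarithms (so the result is in bits) and uses hypothetical function names, `self_information` and `entropy`, that are not from the quoted text. The second function uses the usual information-theory convention, in which entropy is the average information over a set of messages; the quote's sign convention ("negative of its entropy") is not modeled here.

```python
import math


def self_information(p: float) -> float:
    """Information, in bits, carried by a message that occurs with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    # Negative logarithm of the probability: rarer messages carry more information.
    return -math.log2(p)


def entropy(probs) -> float:
    """Average information, in bits, over a set of message probabilities."""
    # Zero-probability messages never occur, so they contribute nothing.
    return sum(p * self_information(p) for p in probs if p > 0)


if __name__ == "__main__":
    # Illustrative probabilities only: a common phrase versus a rare one.
    for label, p in [("common", 0.5), ("rare", 0.001)]:
        print(f"{label}: p={p} -> {self_information(p):.2f} bits")
```

A message with probability 0.5 carries 1 bit, while one with probability 0.25 carries 2 bits: the more probable the message, the less information it gives.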