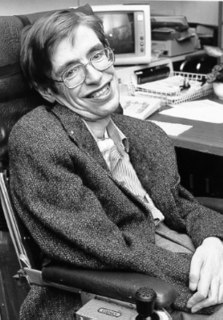

A Quote by Stephen Hawking

Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.

Related Quotes

Everything that civilisation has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools that AI may provide, but the eradication of war, disease, and poverty would be high on anyone's list. Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last.

The advantage of knowing about risks is that we can change our behavior to avoid them. Of course, it is easily observed that to avoid all risks would be impossible; it might entail no flying, no driving, no walking, eating and drinking only healthy foods, and never being touched by sunshine. Even a bath could be dangerous.

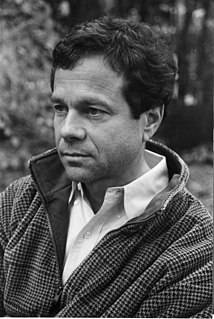

If you're deeply engaged in an event, you're part of it. But if you're outside of it, disinterested, you are the regard that registers history. And that disinterestedness is different from objectivity. The objective view sees only the event, while the disinterested one participates as well as views by creating that link to history. It's a type of viewing that's both inside and out of the event, that brings to the viewing the capacity for human emotion, for compassion, but holds it openly. And objectivity excludes the human element, and is therefore not a point of view open to humans.

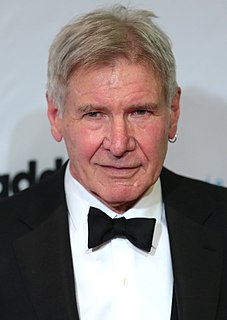

What AI could do is essentially be a power tool that magnifies human intelligence and gives us the ability to move our civilization forward. It might be curing disease, it might be eliminating poverty. Certainly it should include preventing environmental catastrophe. If AI could be instrumental to all those things, then I would feel it was worthwhile.

I think if I were reading to a grandchild, I might read Tolstoy's War and Peace. They would learn about Russia, they would learn about history, they would learn about human nature. They would learn about, "Can the individual make a difference or is it great forces?" Tolstoy is always battling with those large issues. Mostly, a whole world would come alive for them through that book.

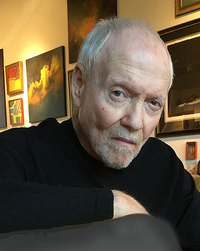

The steep ride up and down the energy curve is the most abnormal thing that has ever happened in human history. Most of human history is a no-growth situation. Our culture is built on growth and that phase of human history is almost over and we are not prepared for it. Our biggest problem is not the end of our resources. That will be gradual. Our biggest problem is a cultural problem. We don't know how to cope with it.

One of my relatives had been asking me how he could break into AI. For him to learn AI - deep learning, technically - a lot of facts exist on the Internet, but it is difficult for someone to go and read the right combination of research papers and find blog posts and YouTube videos and figure out for themselves how to learn deep learning.

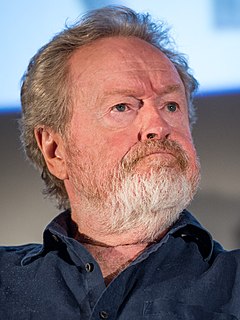

The capacity to create [visual] effects in the computer has made the job easier, but it has also introduced the complexity that you can with a few more keystrokes generate such a busy canvas that the eye doesn't know where to go. You lose human scale on an event and you're just wowed by the kinetics and the visualization. But, often in those cases I feel you lose touch with the human characters and what it is that they would feel and how they might feel, and that's still the most important part.

If Mother Culture were to give an account of human history using these terms, it would go something like this: 'The Leavers were chapter one of human history -- a long and uneventful chapter. Their chapter of human history ended about ten thousand years ago with the birth of agriculture in the Near East. This event marked the beginning of chapter two, the chapter of the Takers. It's true there are still Leavers living in the world, but these are anachronisms, fossils -- people living in the past, people who just don't realize that their chapter of human history is over.'