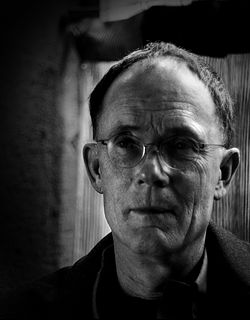

A Quote by William Gibson

A graphic representation of data abstracted from the banks of every computer in the human system. Unthinkable complexity. Lines of light ranged in the nonspace of the mind, clusters and constellations of data. Like city lights, receding.

Related Quotes

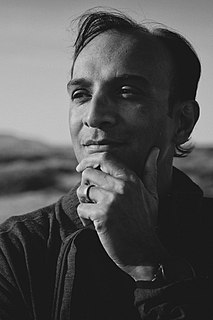

You have to imagine a world in which there's this abundance of data, with all of these connected devices generating tons and tons of data. And you're able to reason over the data with new computer science and make your product and service better. What does your business look like then? That's the question every CEO should be asking.

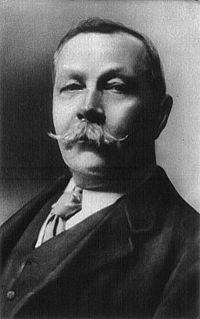

The promoters of big data would like us to believe that behind the lines of code and vast databases lie objective and universal insights into patterns of human behavior, be it consumer spending, criminal or terrorist acts, healthy habits, or employee productivity. But many big-data evangelists avoid taking a hard look at the weaknesses.

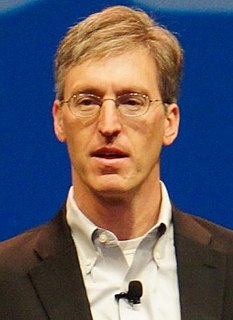

One of the myths about the Internet of Things is that companies have all the data they need, but their real challenge is making sense of it. In reality, the cost of collecting some kinds of data remains too high, the quality of the data isn't always good enough, and it remains difficult to integrate multiple data sources.

Basically, if you want to have a computer system that could pass the Turing test, it as a machine is going to have to be able to self-reference and use its own experience and the sense data that it's taking in to basically create its own understanding of the world and use that as a reference point for all new sense data that's coming in to it.