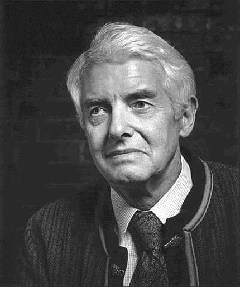

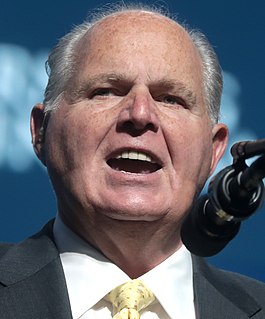

A Quote by Cathy O'Neil

The public trusts big data way too much.

Related Quotes

Let's look at lending, where they're using big data for the credit side. And it's just credit data enhanced, by the way, which we do, too. It's nothing mystical. But they're very good at reducing the pain points. They can underwrite it quicker using - I'm just going to call it big data, for lack of a better term: "Why does it take two weeks? Why can't you do it in 15 minutes?"

As we move into an era in which personal devices are seen as proxies for public needs, we run the risk that already-existing inequities will be further entrenched. Thus, with every big data set, we need to ask which people are excluded. Which places are less visible? What happens if you live in the shadow of big data sets?

MapReduce has become the assembly language for big data processing, and SnapReduce employs sophisticated techniques to compile SnapLogic data integration pipelines into this new big data target language. Applying everything we know about the two worlds of integration and Hadoop, we built our technology to directly fit MapReduce, making the process of connectivity and large scale data integration seamless and simple.