A Quote by Hilary Mason

A lot of people seem to think that data science is just a process of adding up a bunch of data and looking at the results, but that's actually not at all what the process is.

Related Quotes

Computer science only indicates the retrospective omnipotence of our technologies. In other words, an infinite capacity to process data (but only data -- i.e. the already given) and in no sense a new vision. With that science, we are entering an era of exhaustivity, which is also an era of exhaustion.

The USA Freedom Act does not propose that we abandon any and all efforts to analyze telephone data, what we're talking about here is a program that currently contemplates the collection of all data just as a routine matter and the aggregation of all that data in one database. That causes concerns for a lot of people... There's a lot of potential for abuse.

MapReduce has become the assembly language for big data processing, and SnapReduce employs sophisticated techniques to compile SnapLogic data integration pipelines into this new big data target language. Applying everything we know about the two worlds of integration and Hadoop, we built our technology to directly fit MapReduce, making the process of connectivity and large scale data integration seamless and simple.

We are ... led to a somewhat vague distinction between what we may call "hard" data and "soft" data. This distinction is a matter of degree, and must not be pressed; but if not taken too seriously it may help to make the situation clear. I mean by "hard" data those which resist the solvent influence of critical reflection, and by "soft" data those which, under the operation of this process, become to our minds more or less doubtful.

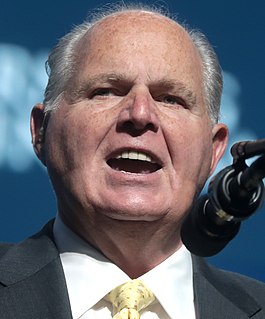

I don't think either party has any idea what's headed their way. Their business is to remain mired in process. They call it deliberation, thoughtful, reasonable deliberation. Trump doesn't know any of that. Trump is not a process guy. To him, process is delay. Process is obfuscation. Process is incompetence. People engaging in process are a bunch of people masking the fact they don't know what they're doing, and he has no time for 'em and no patience.

There's a tendency in graphics to allow the trimming of certain parts. But I think that if you're open about your process, your methodology, such as introducing thresholds, introducing filters, techniques people use in research and data management, it's legitimate. It's legitimate to say, "We're only going to show data above this level, or between levels."

We get more data about people than any other data company gets about people, about anything - and it's not even close. We're looking at what you know, what you don't know, how you learn best. The big difference between us and other big data companies is that we're not ever marketing your data to a third party for any reason.

A lot of environmental and biological science depends on technology to progress. Partly I'm talking about massive server farms that help people crunch genetic data - or atmospheric data. But I also mean the scientific collaborations that the Internet makes possible, where scientists in India and Africa can work with people in Europe and the Americas to come up with solutions to what are, after all, global problems.