Top 1200 Computer Graphics Quotes & Sayings

Explore popular Computer Graphics quotes.

Last updated on April 14, 2025.

There has to be the popcorn genre element, or I don't engage the same way. I like action and vehicle design and guns and computer graphics as much as I like allegory. It's a constant balancing game. I want audiences to be on this rollercoaster that fits the Hollywood mould, but I also want them to absorb my observations.

Hollywood is a special place; a place filled with creative geniuses - actors, screenwriters, directors, sound engineers, computer graphics specialists, lighting experts and so on. Working together, great art happens. But in the end, all artists depend on diverse audiences who can enjoy, be inspired by and support their work.

... what is faked [by the computerization of image-making], of course, is not reality, but photographic reality, reality as seen by the camera lens. In other words, what computer graphics have (almost) achieved is not realism, but rather only photorealism - the ability to fake not our perceptual and bodily experience of reality but only its photographic image.

There are many innovators hard at work seeking to perfect alternatives to meat, milk, and eggs. These food products will, like computer-generated graphics or photography or sound systems, just keep getting better and better until there is little difference between an animal-based protein and a plant-based one, or farm-produced versus cultured meat. That will make it easy for people to make the kinds of choices that will usher in a world with far less violence.

When I use a direct manipulation system - whether for text editing, drawing pictures, or creating and playing games - I do think of myself not as using a computer but as doing the particular task. The computer is, in effect, invisible. The point cannot be overstressed: make the computer system invisible.

When I first started in the industry, there were - this is prior to the era of computer graphics and all these digital tools - there were some pretty rigid, technologically imposed limitations about how you shoot things, because if you didn't shoot 'em the right way, you couldn't make the shot work.

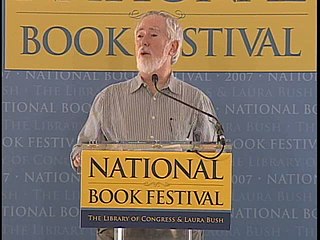

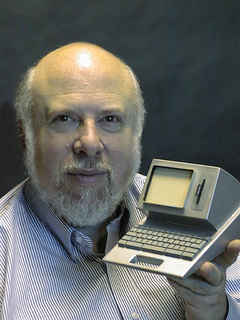

I was asking questions which nobody else had asked before, because nobody else had actually looked at certain structures. Therefore, as I will tell, the advent of the computer, not as a computer but as a drawing machine, was for me a major event in my life. That's why I was motivated to participate in the birth of computer graphics, because for me computer graphics was a way of extending my hand, extending it and being able to draw things which my hand by itself, and the hands of nobody else before, would not have been able to represent.

If you need to do a movie where you have an army of 10,000 soldiers, that's a very difficult thing to shoot for real. It's very expensive, but as computer graphics techniques make that cheaper, it'll be more possible to make pictures on an epic scale, which we haven't really seen since the '50s and '60s.

We're also looking a lot at graphics and video. We've done a lot on a deep technical level to make sure that the next version of Firefox will have all sorts of new graphics capabilities. And the move from audio to video is just exploding. So those areas in particular, mobile and graphics and video, are really important to making the Web today and tomorrow as open as it can be.

Until I reached my late teens, there was not enough money for luxuries - a holiday, a car, or a computer. I learned how to program a computer, in fact, by reading a book. I used to write down programs in a notebook and a few years later when we were able to buy a computer, I typed in my programs to see if they worked. They did. I was lucky.

I've never been much of a computer guy at least in terms of playing with computers. Actually until I was about 11 I didn't use a computer for preparing for games at all. Now, obviously, the computer is an important tool for me preparing for my games. I analyze when I'm on the computer, either my games or my opponents. But mostly my own.

I always loved both 'Breakout' and 'Asteroids' - I thought they were really good games. There was another game called 'Tempest' that I thought was really cool, and it represented a really hard technology. It's probably one of the only colour-vector screens that was used in the computer graphics field at that time.

With all the hype that computer graphics has been getting, everybody thinks there's nothing better than CGI, but I do get a lot of fan mail saying they prefer our films to anything with CGI in it. I'm grateful for that, and we made them on tight budgets, so they were considered B-pictures because of that. And, now here we are, and they've outlasted many so-called A-pictures.

What is the central core of the subject [computer science]? What is it that distinguishes it from the separate subjects with which it is related? What is the linking thread which gathers these disparate branches into a single discipline? My answer to these questions is simple - it is the art of programming a computer. It is the art of designing efficient and elegant methods of getting a computer to solve problems, theoretical or practical, small or large, simple or complex. It is the art of translating this design into an effective and accurate computer program.

The attribution of intelligence to machines, crowds of fragments, or other nerd deities obscures more than it illuminates. When people are told that a computer is intelligent, they become prone to changing themselves in order to make the computer appear to work better, instead of demanding that the computer be changed to become more useful.

The original AMD GCN architecture allowed for one source of graphics commands, and two sources of compute commands. For PS4, we've worked with AMD to increase the limit to 64 sources of compute commands - the idea is if you have some asynchronous compute you want to perform, you put commands in one of these 64 queues, and then there are multiple levels of arbitration in the hardware to determine what runs, how it runs, and when it runs, alongside the graphics that's in the system.

What, then, is the basic difference between today's computer and an intelligent being? It is that the computer can be made to see but not to perceive. What matters here is not that the computer is without consciousness but that thus far it is incapable of the spontaneous grasp of pattern--a capacity essential to perception and intelligence.