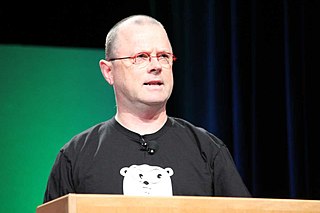

A Quote by Robert Love

The key to a solid foundation in data structures and algorithms is not an exhaustive survey of every conceivable data structure and its subforms, with memorization of each's Big-O value and amortized cost.

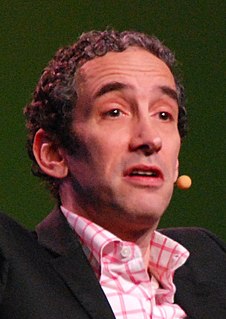

Related Quotes

One of the myths about the Internet of Things is that companies have all the data they need, but their real challenge is making sense of it. In reality, the cost of collecting some kinds of data remains too high, the quality of the data isn't always good enough, and it remains difficult to integrate multiple data sources.

MapReduce has become the assembly language for big data processing, and SnapReduce employs sophisticated techniques to compile SnapLogic data integration pipelines into this new big data target language. Applying everything we know about the two worlds of integration and Hadoop, we built our technology to directly fit MapReduce, making the process of connectivity and large scale data integration seamless and simple.